UCI-led team uses robots to study brain’s ability to switch between first-person experience and global map

- November 2, 2022

- Findings, published in PNAS, are helping researchers understand a unique cognitive function with links to memory, navigation

How do we seamlessly switch from one view perspective to another, and what can this unique cognitive function tell us about memory and autonomous system design? Robot simulations created in the Cognitive Anteater Robotics Lab (CARL) at UC Irvine are helping researchers understand the brain’s ability to link between a first-person experience and a global map. Findings, published in the Proceedings of the National Academy of Sciences, provide insight on brain regions involved in memory and could help improve navigation systems in autonomous vehicles.

“If I look at the UCI campus map, I can place myself in the specific location where I expect to see certain buildings, crossroads, etc. Going the other way, given some campus buildings around me, I can place myself on the campus map,” says lead author Jinwei Xing, UCI cognitive sciences Ph.D. candidate. “We wanted to better understand how this computation is done in the brain.”

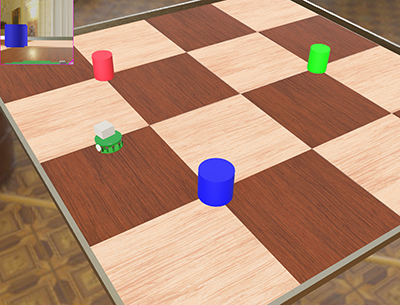

Xing teamed up with Liz Chrastil, UCI neurobiology and behavior assistant professor; Jeff Krichmar, UCI cognitive sciences professor; and Douglas Nitz, UC San Diego cognitive science professor and department chair, to simulate this behavior using a robot equipped with a camera to provide a first-person view. As the robot moved around the lab, an overhead camera acted like a map, providing a top-down view. Using tools from AI and machine learning, the researchers were able to reconstruct the first-person view from the top-down view and vice versa.

“What we discovered in the AI model were things that looked like place cells, head direction cells, and cells responding to objects just like those observed in the brains of humans and rodents,” says Krichmar, who leads the CARL lab. “Perspective switching is something we are constantly doing in our daily lives, but there is little experimental data trying to understand it. Our modeling study suggests a plausible solution to this problem.”

Findings from this work provide interesting predictions about potential brain areas carrying out this behavior, and how view switching might be computed in the brain.

“It certainly has implications for understanding memory and why we get lost, which can be impacted by dementia and Alzheimer’s disease,” says Chrastil. Nitz adds, “And it has implications for drones, robots, and self-driving vehicles. An aerial drone with a top-down bird’s eye view could provide information to a robot on the ground. The robot on the ground could provide valuable information to the aerial drone. For self-driving cars, top-down map information could be incorporated into the car’s navigation and route planning system.”

For next steps, researchers are working to design human and rodent experiments to test the model’s predictions. They’re also planning to incorporate findings into their robotic designs.

Funding for this work was provided by the Air Force Office of Scientific Research contract FA9550-19-1-0306, the National Science Foundation Information and Intelligence Systems Robust Intelligence under award number 1813785, and the National Science Foundation Neural and Cognitive Systems Foundations Information and Intelligence Systems under award number 2024633.

-Heather Ashbach, UCI Social Sciences

-pictured: Robot simulation using Webots.
